Microsoft Azure for SQL Server Professionals

How Azure Synapse integrates your data pipelines

Tech on Fire: Take a Tour of Cosmos DB

Azure Resources: Saving Time And Money

Scripting Azure Synapse Analytics

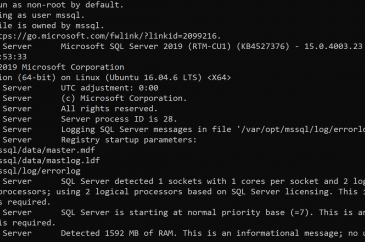

Automating SQL Server 2019 Docker Deployments

How to Avoid a Docker Database Disaster

Survey of the Azure Data Storage Landscape

Migrating Your Enterprise Application to the Cloud – Your Database Isn’t the Problem, Your Data Model Is

For CTOs looking to squeeze new life out of legacy enterprise applications, the cloud offers tantalizing prospects. Pushbutton scalability, reduced capital costs, outsourcing of non-core IT functions, potentially greater monitoring and health management capabilities, and almost certainly greater overall uptime… even with the potential downsides, it’s no wonder senior management is tempted.

And yet those downsides are more than nagging problems; in many cases they pose significant barriers to a successful move from private data center to public cloud. An existing enterprise app might work fine running on internal hardware, with a modest user base… but move it blindly to a VM in Azure or AWS and suddenly that clever little accounting app grinds to a halt (as does your business). But why is that, exactly?

Where’s The Rub?

There are many potential difficulties to overcome when migrating an existing enterprise app to the cloud: reliance on past-generation (read: potentially unsafe) database or file-system drivers that might be ill-suited to (or incompatible with) your chosen cloud stack; legal or regulatory requirements that mandate where the data lives; assumptions about the underlying hardware or network infrastructure baked (inadvertently or otherwise) into your software; security contexts or sandbox privileges required for successful operation that may not be recommended best practices in the cloud; and so on. Any of these (and many more) can trip up a migration effort. But there’s one incompatibility so pervasive that it’s worth discussing on its own. Its origins largely predate cloud computing itself, in fact. But its negative effects haunt us now, and we’ll likely continue to deal with them for years to come.

It’s your application’s data model.

It’s not the data itself… even if you’ve got a lot of that, there’s plenty of room to store it all in the cloud, if you want. And it’s not the application code per se, though it’s likely that you’ll need to change at least some of that to maximize the full potential of your cloud-hosted application.

No, what I’m talking about is the original conceptual model used to define the database underpinning your application. This model was probably created a long time ago… perhaps you paid a lot of money to a database architect who studied your requirements and used tools like Erwin or ER/Studio to make complex graphical depictions of tables and relationships, or maybe the model was defined by developers in code using APIs like Entity Framework or NHibernate. In either case, you could likely sit down with a developer on your team and have them walk you through the model, and you’d see elements of your business domain that you recognize… a Customer table, defined relationships between an Address and a Warehouse, etc. And this would seem logical and reasonable to you… the application performs some vital function for your business, as part of that it manipulates data, that data needs to live somewhere… voila! Here it is… in the database, created from this model.

A Minor Assumption, With Major Implications

The problem is that this model almost certainly has one very big assumption baked into it… it assumes there will be one physical database created from the model, and that all the data will live there. It is by definition a relational model… the concepts modeled within it and their relationships (their “referential integrity”) can only be reliably maintained if the data is reasonably co-located, so that the database process can enforce transaction boundaries, data staleness and visibility rules, update query indexes as data changes render them obsolete, process complex joins across multiple tables, etc. Relational databases do not (cannot) reliably do these things across multiple machines. For a more detailed, nerdy explanation of why this is so, see the CAP theorem.
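
To make the co-location point concrete, here is a minimal sketch using Python’s built-in sqlite3 module with an in-memory database as a stand-in for SQL Server (an assumption chosen purely for portability; the customer and orders tables and the “Contoso” row are hypothetical). The foreign-key check and the all-or-nothing rollback both work only because both tables live inside one database process that can see every row.

```python
from __future__ import annotations

import sqlite3


def demo_single_database_integrity() -> tuple[int, int]:
    """Show two guarantees a single relational database gives us for free:
    referential integrity and all-or-nothing transactions."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when asked
    conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.execute(
        "CREATE TABLE orders ("
        " id INTEGER PRIMARY KEY,"
        " customer_id INTEGER NOT NULL REFERENCES customer(id),"
        " total REAL NOT NULL)"
    )

    # 1. Referential integrity: an order for a nonexistent customer is rejected.
    try:
        conn.execute("INSERT INTO orders (customer_id, total) VALUES (999, 10.0)")
    except sqlite3.IntegrityError:
        conn.rollback()  # close the implicit transaction the failed INSERT opened

    # 2. Atomicity: one bad statement undoes the whole transaction.
    try:
        with conn:  # commits on success, rolls back on any exception
            conn.execute("INSERT INTO customer (id, name) VALUES (1, 'Contoso')")
            conn.execute("INSERT INTO orders (customer_id, total) VALUES (1, 25.0)")
            conn.execute("INSERT INTO orders (customer_id, total) VALUES (2, 5.0)")  # no customer 2
    except sqlite3.IntegrityError:
        pass  # the customer row and the first order were rolled back as well

    # 3. The same work without the bad row commits cleanly.
    with conn:
        conn.execute("INSERT INTO customer (id, name) VALUES (1, 'Contoso')")
        conn.execute("INSERT INTO orders (customer_id, total) VALUES (1, 25.0)")

    customers = conn.execute("SELECT COUNT(*) FROM customer").fetchone()[0]
    orders = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
    conn.close()
    return customers, orders


print(demo_single_database_integrity())  # (1, 1)
```

Split those two tables across two independent servers and neither guarantee comes for free any more; the application (or some distributed coordination layer) has to take on that work.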

In short, a relational model is predicated on the existence of One Giant Database. And unfortunately, sooner or later that single machine is doing as much work as it can do, but you need more. And now you have One Giant Problem.

To be clear, this isn’t really a cloud-specific issue. Any computational- or data-intensive resource (cloud-based or not) will eventually saturate. At that point, you have two options: scale up (buy a bigger server) or scale out (buy more servers). If the resource in question is an application server, either option can work (assuming your application architect is competent and anticipated scale-out scenarios). But if the resource is a traditional relational database, you really only have two options: scale up and hope it’s good enough, or re-architect for scale-out. Sharding is sometimes a possible third option, sometimes a manifestation of the second… it has its place, but also enough drawbacks to make it unsuitable for the general case.
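
A rough illustration of why sharding is not a general answer follows. It is a minimal Python sketch assuming simple hash-based routing across a fixed number of shards; the shard count, key formats, and function names are all hypothetical. When orders are routed by their own key, many of them land on a different shard than their customer, so no single database can check that foreign key. Routing orders by customer key fixes that one relationship, but only because we chose it up front.

```python
from __future__ import annotations

import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard using a stable hash. Python's built-in hash() is
    randomized per process, so it is unsuitable for routing data."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def split_by_locality(
    orders: dict[str, str], shard_count: int, route_by_customer: bool
) -> tuple[list[str], list[str]]:
    """Split order -> customer pairs into orders whose foreign key can be checked
    on a single shard and orders that would need a cross-shard lookup."""
    local: list[str] = []
    cross_shard: list[str] = []
    for order_id, customer_id in orders.items():
        routing_key = customer_id if route_by_customer else order_id
        order_shard = shard_for(routing_key, shard_count)
        customer_shard = shard_for(customer_id, shard_count)
        if order_shard == customer_shard:
            local.append(order_id)
        else:
            cross_shard.append(order_id)
    return local, cross_shard


# Hypothetical data: 20 orders spread across 5 customers, 4 shards.
orders = {f"order-{i}": f"customer-{i % 5}" for i in range(20)}
_, cross = split_by_locality(orders, shard_count=4, route_by_customer=False)
print("routed by order id, cross-shard orders:", len(cross))
local, cross = split_by_locality(orders, shard_count=4, route_by_customer=True)
print("routed by customer id, cross-shard orders:", len(cross))  # 0
```

Co-locating by customer only helps queries scoped to a single customer. A relationship that cuts across customers, like an Address-to-Warehouse link, still spans shards and has to be handled in application code, and changing the shard count reshuffles where every key lives.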

Scale up… or out… or ?

So for relational database scalability issues, scale up is usually the first consideration. More memory, more processors, more and faster disks… these will help your application serve more requests and handle more users, for a time. But you eventually bump up against the laws of physics. There is only so much RAM, CPU, and disk I/O you can bake into a database machine (physical or virtual, cloud-based or not). And even if your data access needs are within reach of the current technological state of the art, they may not be within reach of your budget (a quick perusal of AWS hosted database pricing shows a range from less than 2 cents/hour to over $7.50/hour… cha-ching!).
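
For a sense of scale, that hourly range can be turned into monthly and yearly figures. This small Python helper assumes an always-on instance billed for about 730 hours a month (24 * 365 / 12) and ignores storage, I/O, licensing, and reserved-instance discounts; actual AWS pricing varies by region and changes over time, so treat the numbers as illustrative only.

```python
HOURS_PER_MONTH = 24 * 365 / 12  # 730.0, a common billing approximation


def monthly_cost(hourly_rate_usd: float, instances: int = 1) -> float:
    """Cost of running `instances` always-on database servers for one month."""
    return round(hourly_rate_usd * HOURS_PER_MONTH * instances, 2)


print(monthly_cost(0.02))       # 14.6
print(monthly_cost(7.50))       # 5475.0
print(monthly_cost(7.50) * 12)  # 65700.0
```

At the top of that range, a single instance runs roughly $5,475 a month, or about $65,700 a year, before you have added so much as a standby replica.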

And so you’re left with the option of re-architecting for scale out. Scale out has two significant advantages over scale up. First, it’s theoretically unbounded; you can keep adding more servers forever. In practice this isn’t even remotely true, as life and software architecture inevitably intrude and impose some actual upper bound. But still, it’s reasonable to say that a properly architected enterprise application can scale out much, much further than it can ever scale up. The second advantage is cost; scaled out solutions can be built incrementally, and with commodity hardware. This affords you the opportunity to purchase as much scalability as you need at any given moment in time. Scaled up solutions require ever-more-expensive hardware, and perhaps worse, existing resources must be retired or repurposed… with scale up, you can’t aggregate hardware to work cooperatively (which is exactly what happens in scale out).

But the big disadvantage of scale out is that you have to plan for it, architect for it, and choose technologies that enable it. And there’s the core issue with relational models and scale out: a relational model, the database created from it, and likely the code written to work with it, are all fundamentally incompatible with any plan to scale out arbitrarily (darn that referential integrity!). Something will have to change, and that something will cost you time and money. Products like SQL Server and Oracle offer limited options for clustering a handful of machines together, but these tend to serve failover and high availability more than raw scalability.

A Storage Model Is Not a Domain Model

So, fine then… relational databases are incompatible with the preferred means of scaling cloud-based software (meaning, scale out). Relational models are poor but frequently used tools for modeling business domains, with significant negative implications for future scalability of the affected applications. But how did this happen? Didn’t we see this coming?

Sure we did. For years, smart people have implored us to stop using (relational) database models as the blueprint for software implementing non-trivial business processes. We just didn’t listen. Our tools (cough Entity Framework cough) make it easy to go from database to code, and while features like EF Code First offer other modeling approaches, many applications are still constructed bottom-up from a relational database model. Guess what? If you start with a monolithic relational model and auto-generate EF code to talk to that model, your EF code isn’t any more cloud-ready than your database is (to be clear, I like EF and think it’s entirely appropriate for use on constrained subsets of an otherwise large model, even in the cloud… it’s the naïve use of huge, monolithic EF models that I object to).

“But we’ve always done it this way.” Sure we have. In fairness, that’s not entirely our fault… the skills and tools needed to create a proper domain model independent of a dedicated storage model have for various reasons not yet gained broad traction. The path of least (initial) resistance is to start with a database and build upwards. Legions of enterprise developers have written code like this for years (decades?) and still do. I like to think we’re slowly moving beyond this, and I have high hopes for things like Domain-Driven Design, microservices architectures, and polyglot persistence as some of the practices and patterns that will help us break the cycle. More on that in my next post. But for now, we’re still a long way from industry-wide enlightenment.
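
One way to read that advice, sketched in Python under loose assumptions: keep business rules in plain domain types and put storage behind a narrow repository interface, a pattern commonly associated with Domain-Driven Design. The Customer type, its credit-limit rule, and the repository and service names are hypothetical, and the in-memory repository stands in for whatever store (relational or otherwise) a given part of the application ends up using.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class Customer:
    """Domain entity: holds a business rule and knows nothing about tables or keys."""
    customer_id: str
    name: str
    credit_limit: float
    open_balance: float = 0.0

    def place_order(self, amount: float) -> None:
        if amount <= 0:
            raise ValueError("order amount must be positive")
        if self.open_balance + amount > self.credit_limit:
            raise ValueError("credit limit exceeded")
        self.open_balance += amount


class CustomerRepository(Protocol):
    """Storage boundary: any backend that can get and save a Customer fits here."""

    def get(self, customer_id: str) -> Optional[Customer]: ...

    def save(self, customer: Customer) -> None: ...


class InMemoryCustomerRepository:
    """Stand-in store; a SQL, document, or key/value store could replace it."""

    def __init__(self) -> None:
        self._customers: dict[str, Customer] = {}

    def get(self, customer_id: str) -> Optional[Customer]:
        return self._customers.get(customer_id)

    def save(self, customer: Customer) -> None:
        self._customers[customer.customer_id] = customer


def handle_place_order(repo: CustomerRepository, customer_id: str, amount: float) -> float:
    """Application service: load, apply the domain rule, persist. Returns the new balance."""
    customer = repo.get(customer_id)
    if customer is None:
        raise KeyError(customer_id)
    customer.place_order(amount)
    repo.save(customer)
    return customer.open_balance


repo = InMemoryCustomerRepository()
repo.save(Customer("c-1", "Contoso", credit_limit=100.0))
print(handle_place_order(repo, "c-1", 40.0))  # 40.0
```

Because the credit rule lives in Customer rather than in a table constraint or stored procedure, swapping the repository for a different backend leaves the rule untouched, which is what makes it practical to give different parts of the domain different stores later.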

Your Technical Debt Is Now Past Due

We’ve kicked this relational modeling can down the road for a long time, because we could. In a world of small private data centers, modest departmental application needs, and manual, Excel-driven business processes, relational databases built from relational models, with CRUD-style code layered on top, are not always great but are often good enough. It’s when our ambitions grow, and our anticipated use of these creaky enterprise apps grows along with them, that our best-laid plans face the harsh reality of the technical debt we’ve incurred.

You want to move your IT infrastructure to the cloud? You want a more elastic, robust, flexible, agile infrastructure upon which to run your business? That’s a valuable goal. The cloud can give you that, and more. But make plans to retire that technical debt first.

In my next post, I’ll explore ways to do just that… we’ll talk about migration strategies for existing applications, and also touch on ways to minimize that technical debt in the first place.

Until then…